Data Science - Platforms and Initiatives

Scientific computing infrastructure @ DKFZ

State-of-the-art scientific computing infrastructure:

- Scientific compute cluster: ~3700 cores, ~31 PB of disk in file storage and ~6 PB in object storage.

- GPU computing for machine learning, including the largest DGX-2 research installation nationally.

- Internal cloud solutions and federated networks, based on Red Hat OpenStack Platform (RHOSP).

Data science scientific projects and infrastructure initiatives

de.NBI cloud

The de.NBI cloud is a fully academic cloud, free of charge for academic users, where academic cloud centers provide storage and computing resources for locally stored data. All layers, i.e. hardware and personnel resources, IT administration and operation, as well as deployment of operating systems, frameworks and workflows are provided by the five local cloud centers.

The DKFZ is engaged in operating the de.NBI site HD-HuB, which involves DKFZ, Heidelberg University and BIH Berlin.

More details: de.NBI cloud

DKTK Joint Imaging Platform

The Joint Imaging Platform (JIP) is a strategic initiative within the German Cancer Consortium (DKTK) and is developed at the DKFZ. The aim is to establish a technical infrastructure that enables modern and distributed imaging research within the consortium. The main focus is on the use of modern machine learning methods in medical image processing. The platform strengthens collaborations between the participating clinical sites and supports multicenter trials.

More details: DKTK Joint Imaging Platform

Federated Cancer Networks

Within DKTK, the Clinical Communication Platform (CCP) acts as a data bridge between basic research and clinical practice. Its software platform (CCP-IT) interconnects so-called Bridgeheads to form an information hub for cancer research enabling researchers to access biological samples and patient data from any DKTK site and to assess the feasibility of clinical trials while complying with strict data protection standards. Another key focus of the CCP-IT is the development of new quality assurance standards for clinical data.

In two projects funded by the German Cancer Aid, the CCP-IT is being joined by 6 additional oncological centers of excellence (CCC) in the context of the C4 project ("Connecting Comprehensive Cancer Centers"). The combined platform, consisting of all academic German Oncology Centers of Excellence, also serves to interconnect the 15 centers of the national Network Genomic Medicine Lung Cancer (nNGM) to implement nationwide comprehensive molecular diagnostics and personalized therapy for patients with advanced lung cancer.

The German Biobank Alliance

Biomedical research depends on high-quality, well-annotated biomaterial, which often cannot be supplied by a single institution. To address this, 11 German biobanks joined forces to form the German Biobank Alliance (GBA), paving the way for sharing biological samples and associated clinical data within Germany and across Europe by forming the German Biobank Node in the European BBMRI-ERIC. This BMBF-funded pioneering joint venture makes use of the "Bridgehead" software developed to share clinical data within the German Cancer Consortium (DKTK) while complying with Germany's stringent data protection standards. The German Cancer Research Center in Heidelberg is the center of expertise in charge of coordinating the IT for the alliance.

NFDI

In the German National Research Data Infrastructure (NFDI), valuable data from science and research are systematically accessed, networked and made usable in a sustainable and qualitative manner for the entire German science system. The NFDI aims to create a permanent digital repository of knowledge as an indispensable prerequisite for new research questions, findings and innovations. Relevant data should be made available according to the FAIR principles (Findable, Accessible, Interoperable and Reusable).

Three NFDI Consortia are based at the DKFZ:

The DKFZ participates in the consortium NFDI4BIOIMAGE, which is part of the National Research Data Infrastructure (Nationale Forschungsdateninfrastruktur, NFDI). The consortium specializes in research data management for microscopy and bioimage analysis, and it is coordinated by the Heinrich-Heine-University Düsseldorf in close collaboration with the DKFZ.

Prof. Elisa May, Chief Enabling Technology Officer at the DKFZ, acts as the deputy speaker of NFDI4BIOIMAGE and coordinates the consortium's work program with international data management activities in bioimaging.

Dr. Jan-Philipp Mallm, Head of the Single-cell Open Lab at the DKFZ, co-leads the Task Area on multimodal data integration with a specific focus on spatial transcriptomics, a set of methods combining microscopy and genome sequencing data.

The Information Infrastructure for BioImage Data (I3D:bio) constitutes an independent, DFG-funded partner project of NFDI4BIOIMAGE with a specific focus on leveraging the image data management system OMERO (OME Remote Objects) for centralized bioimage data storage and metadata annotation. In the course of the project, OMERO will be implemented at the DKFZ.

More information:

https://nfdi4bioimage.de

https://www.i3dbio.de

Contact at the DKFZ: Dr. Christian Schmidt, project coordinator (email: christian01.schmidt@dkfz-heidelberg.de)

The immune system protects vertebrate hosts from infections and cancer. However, failures in its regulation can cause autoimmunity, allergy, immunodeficiencies and malignancies. To understand the mechanisms underlying these processes and how they can be manipulated for the benefit of humans and animals, immunologists use a wide range of experimental methods. Handling the resulting data efficiently, so that they meet the scientific demands for reproducibility and reusability, is currently one of the key challenges for immunological research.

As one of the 26 NFDI (https://www.nfdi.de) consortia, NFDI4Immuno aims to initiate and shape the necessary transformation process together with its community. DKFZ and its 14 partner institutions (nine co-applicants and five participants) ensure broad anchoring in the scientific community as a whole as well as in the respective thematic domains.

More information: NFDI4Immuno

The German Human Genome-Phenome Archive (GHGA) is funded by the DFG as part of the German national program for research data infrastructures, the NFDI e.V. As a national node of the federated European Genome-phenome Archive (EGA), GHGA will follow national regulations on data protection and at the same time be closely linked to international data infrastructures.

Coordinated by the German Cancer Research Center (DKFZ), GHGA has 46 participants from 21 institutions. Prof. Oliver Stegle, division head at the DKFZ, serves as the current speaker of GHGA.

More information: www.ghga.de

More details: https://www.nfdi.de

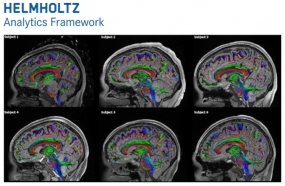

HAF - Helmholtz Analytics Framework

The Helmholtz Analytics Framework is a data science pilot project funded by the Helmholtz Initiative and Networking Fund. Six Helmholtz centers (DLR, KIT, FZ Jülich, DESY, DKFZ and HMGU) will pursue a systematic development of domain-specific data analysis techniques in a co-design approach between domain scientists and information experts in order to strengthen the development of the data sciences in the Helmholtz Association. In challenging applications from a variety of scientific fields, data analytics methods will be applied to demonstrate their potential in leading to scientific breakthroughs and new knowledge. In addition, the exchange of methods among the scientific areas will lead to their generalization.

The DKFZ is involved in the use case "high-throughput image-based cohort phenotyping".

More details: Helmholtz Analytics Framework

HDF - Helmholtz Data Federation

The Helmholtz Data Federation (HDF) is a strategic initiative of the Helmholtz Association addressing one of the great challenges of the next decade: dealing with the avalanche of data created in science, in particular by the large research infrastructures of the Helmholtz Centers.

More details: Helmholtz Data Federation

HIDSS4Health

Due to increasingly complex analytical and diagnostic methods in medical research and in the clinic, data processing in health care is becoming more and more important. To meet this trend, leading scientists in the field of data science and health from the Karlsruhe Institute of Technology (KIT), the German Cancer Research Center (DKFZ) and the University of Heidelberg (UHEI) have joined forces to form an interdisciplinary network that trains young talents at the interface between data science and health and prepares them for their future work as leaders in science and industry. Within an international doctoral program, the Helmholtz Information & Data Science School for Health (HIDSS4Health), young people are specially trained in the research areas Imaging & Diagnostics, Surgery & Intervention 4.0 and Models for Personalized Medicine.

More details: HIDSS4Health

HIFIS - Helmholtz Federated IT Services

HIFIS aims to ensure an excellent information environment for outstanding research in all Helmholtz research fields and a seamless and performant IT-infrastructure connecting knowledge from all centres. It will build a secure and easy-to-use collaborative environment with efficiently accessible ICT services from anywhere. HIFIS will also support the development of research software with a high level of quality, visibility and sustainability.

The DKFZ engages in all 3 Competence Clusters.

More details: Helmholtz Federated IT Services

HIP - Helmholtz Imaging Platform

Images are ubiquitous in society. The same holds true for science. An increasingly large proportion of data and information are collected and processed in the form of images. New technologies and algorithms help rapidly handle and analyze these images. The Helmholtz Association has therefore adopted an innovative approach in order to systematically support and further develop imaging processes and methods of analysis: the Helmholtz Imaging Platform. Under the lead of the German Electron Synchrotron (DESY), the German Cancer Research Center (DKFZ) and the Max-Delbrück-Centrum (MDC), the Helmholtz Imaging Platform will include research into imaging processes, provision of advisory services in the field of imaging processes, and access to imaging modalities and image data. HIP will not only work on the technical and methodological aspects of the research area, it will also develop a network for exchanging ideas and developing expertise.

HMC – Helmholtz Metadata Collaboration

Research data is suitable for re-use under scientific quality standards if it fulfills the following requirements: It contains a description of the type and organization of the data, information on their occurrence and information that allows conclusions to be drawn on the precision and quality of the data.

The collection of all these attributes describing a record forms a record of its own, called metadata. In times of digitization and big data analytics, the importance of metadata goes beyond this qualifying, descriptive function. Metadata must be searchable and automatically available for evaluation, because only then can the data records they describe be made searchable and accessible for subsequent use.

Today, the professional, efficient and forward-looking capture of metadata often fails because of the extra work required to organize and store the metadata appropriately while taking into account diverse, dynamically changing standards. The possibilities of digitization already allow these processes to be supported by suitable technologies and data services.

The Helmholtz Metadata Collaboration (HMC) Platform aims at fostering the enrichment of research data with metadata, and implements supporting services for all domains by consolidating the expertise of the six Helmholtz research areas in Metadata Hubs. It introduces the subject of metadata to the stakeholders, highlights the importance of metadata within research data management, offers advice, and facilitates sustainable infrastructure services for the storage, reuse and international exchange of metadata.

More details: HMC – Helmholtz Metadata Collaboration

HMSP - Helmholtz Medical Security, Privacy, and AI Research Center

The Helmholtz Medical Security, Privacy and AI Research Center (HMSP) constitutes a joint initiative of six Helmholtz Centers – CISPA, DZNE, DKFZ, HMGU, HZI, and MDC – that brings together leading experts from the field of IT-security, privacy and AI/machine learning as well as the medical domain to enable secure and privacy-preserving processing of medical data using next-generation technologies. It aims for scientific breakthroughs at the intersection of security, privacy and AI/machine learning with medicine, and it aims to develop enabling technology that provides new forms of efficient medical analytics while offering trustworthy security and privacy guarantees to patients as well as compliance with today's and future legislative regulations.

More details: HMSP - Helmholtz Medical Security, Privacy, and AI Research Center

INFORM

Although current treatment of childhood malignancies results in overall cure rates on the order of 75% with modern multi-modal protocols, relapse from high-risk disease remains a tremendous clinical problem. This medical need is being addressed with INFORM ("INdividualized Therapy FOr Relapsed Malignancies in Childhood"). State-of-the-art next-generation sequencing technologies are used to generate a molecular "fingerprint" of each individual tumor, which is complemented by drug sensitivity profiles. An expert panel of experienced pediatric oncologists, biologists, bioinformaticians and pharmacologists classifies and weighs the aberrations/targets found for each single patient according to clinical relevance.

In INFORM we work on the identification and classification of genetic and epigenetic changes in childhood tumors using computational methods and the DKFZ Compute Infrastructure. This work enables us to generate personalized molecular tumor profiles that allow physicians to design a tailored therapy approach for each individual patient. In order to interpret, integrate and visualize the vast amount of molecular biological data, we use state-of-the-art computer-assisted methods to understand the genetic and epigenetic diversity of childhood cancer and to provide accurate molecular diagnosis and personalized therapies. We have analyzed over 1000 patients since INFORM's initiation.

More details: INFORM

NCT DataThereHouse (NCT DTH)

The NCT DataThereHouse (NCT DTH) is a strategic initiative of the National Center for Tumor Diseases Heidelberg (NCT) in which the German Cancer Research Center (DKFZ) and the Heidelberg University Hospital (UKHD) have joined forces in order to simultaneously guarantee best patient care and best translational research. For translational research in oncology, access to patient-related data is crucial.

The NCT DTH, as the central Data Warehouse at the NCT, forms the basis for providing the data generated in clinical routine, clinical trials and basic science to each clinical and basic researcher at the NCT, for sharing data across different sites, and for working on common questions in multi-centered projects. The concept entails search abilities for all data points with field-by-field integrity and access management, integrating data from diverse sources such as the Heidelberg Hospital Information System, the NCT Cancer Registry, the NCT Biobank, the Laboratory Information Management System, and the Picture Archiving and Communication System. In this way, the NCT DTH project will facilitate the direct link between the medical and the scientific world and allow the integration of knowledge databases and the exchange of data in existing and future collaborations.

Especially in precision medicine, the impact of analyzing data from a large number of patients together is enormous. With the increasing influence of molecular information about oncological diseases and the technical possibilities to generate molecular data with increasing velocity, the variety of difficult-to-compare patient profiles rises at an unprecedented speed and demands solutions from computer scientists. The Division of Medical Informatics for Translational Oncology (MITRO) at DKFZ has started to use such heterogeneous patient profiles in analyses that apply machine learning methods for a wide range of applications. One application is diagnosis and patient categorization by molecular stratification. Another is the prediction of patient trajectories with regard to prognosis and treatment, including tasks such as drug response prediction, survival prediction and recurrence prediction. By checking the contribution of particular molecular features to the outputs of the prediction models, hypotheses for fundamental biological study can be generated.

Re-Usable tools for Data Protection in Medical Research

Distributed medical research is facing increasingly strict requirements regarding data privacy and data security. In order to support researchers with these issues, the TMF e.V. introduced generic data privacy blueprints, which are recommended by the data protection officers of all German federal states. While these concepts greatly facilitate the writing of data protection concepts, their implementation in IT requires highly

Funded by the DFG, the DKFZ-coordinated MAGIC consortium has evaluated and further developed open-source software to establish data privacy-compliant IT infrastructures. These reusable and interoperable IT components are widely used to interchange patient data in a privacy and security-compliant manner and are made available to the scientific community as open source tools.

TFDA - Trustworthy Federated Data Analytics

The "Trustworthy Federated Data Analytics" (TFDA) project is funded by the Helmholtz Initiative and Networking Fund and jointly conducted by the Helmholtz centres CISPA and DKFZ. In this interdisciplinary project we aim at facilitating the implementation of decentralized, cooperative data analytics architectures within and beyond Helmholtz, thereby providing solutions for the continuously growing data demand in the domain of machine learning and helping to solve future grand challenges. TFDA will facilitate bringing the algorithms to the data in a trustworthy and regulatory-compliant way instead of taking a data-centric approach. To this end, TFDA will address the technical, methodical and legal aspects of ensuring the trustworthiness of analyses and transparency regarding their inputs and outputs without violating privacy constraints. To demonstrate applicability and to ensure the adaptability of the methodological concepts, we will validate our developments in the use case "Federated radiation therapy study".
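The idea of bringing the algorithm to the data can be illustrated with a deliberately small sketch. It is not TFDA software and does not reflect the project's actual architecture. The names `LocalSummary`, `summarize_locally` and `federated_mean`, as well as the example values, are hypothetical and chosen only for illustration. Each site computes an aggregate on its own data, and only that aggregate is combined centrally.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class LocalSummary:
    """Aggregate statistics a site releases instead of its raw records."""
    count: int
    total: float


def summarize_locally(values: Sequence[float]) -> LocalSummary:
    """Runs at the site that holds the data; only count and sum leave it."""
    return LocalSummary(count=len(values), total=float(sum(values)))


def federated_mean(summaries: Sequence[LocalSummary]) -> float:
    """Combine per-site summaries into a global mean without pooling raw data."""
    n = sum(s.count for s in summaries)
    if n == 0:
        raise ValueError("no observations reported by any site")
    return sum(s.total for s in summaries) / n


# Hypothetical per-site measurements (illustrative values only).
site_a = [2.0, 4.0, 6.0]
site_b = [10.0]

summaries = [summarize_locally(site_a), summarize_locally(site_b)]
global_mean = federated_mean(summaries)
print(global_mean)
```

Releasing aggregates does not by itself guarantee privacy. For example, a site with very few records can still leak information. A trustworthy setup would need additional technical and legal safeguards of the kind the project describes.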